CRIT Redux: Evaluating AI, Google, and Databases for Research

Emily Wilt's great lesson on evaluating AI when doing research

Author's Note: I saw this excellent lesson posted online and reached out to Emily Wilt to see if she would write about it here and share it with all of you. Everything below was written by Emily.

Have you ever met a teenager who will listen to you lecture at length, especially about what not to do, and then dutifully follow your instructions to a T?

No?

I haven’t either.

From my students’ perspectives, generative AI is a shortcut to the instant gratification of checking an assignment off their to-do lists so they can get back to whatever they actually care about doing. Very few of them are concerned about the long-term disadvantages of outsourcing critical thinking and analysis; they’re worried about living in the now.

I, on the other hand, am immensely concerned with the long-term consequences of outsourcing critical thinking, especially when the tools we’re outsourcing to are notoriously inconsistent in providing credible information.

So, what’s a high school librarian, given a grand total of 90 minutes with her students for research instruction, to do?

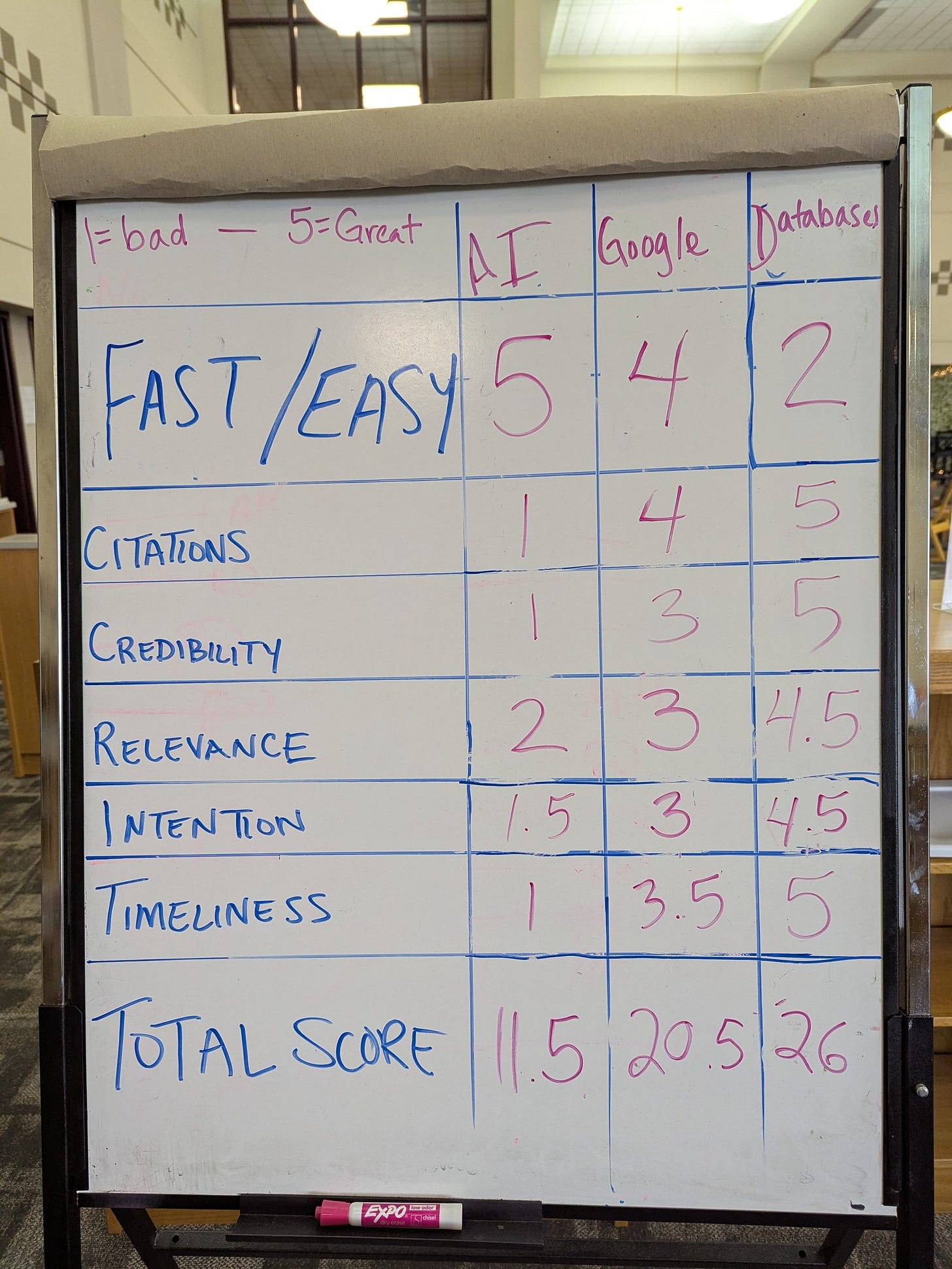

Several years ago now, a colleague and I, tired of the old CRAAP source evaluation approach, came up with a new acronym: CRIT, which stands for Credibility, Relevance, Intention, and Timeliness. Since CRIT’s inception, we have been teaching students how to evaluate information using this approach.

This year, I thought: why not evaluate our tools using this approach as well? Why not guide students through an exercise where they use CRIT to come to their own conclusions about why generative AI is not a good tool for academic research, rather than lecturing them and having them tune me out? Seems like a win-win to me.